Assembly inclusion proof demo

In my last post I outlined the idea for providing inclusion proofs that demonstrate that your contribution to an assembly was really included in the synthesis generated by an LLM.

Here, I’m sharing a prototype to show how this would work.

The steps are as follows:

We start an assembly. We run various forms of assembly, the simplest is a “real-time consensus” assembly, where we start with an initial position (or a set of initial seed questions) and provide an email address where participants can send their feedback. As the feedback arrives, it is passed to an LLM to synthesise, with the outcome being that the position statement is updated to reflect the new global consensus. Typically this is done periodically or after N responses have been received rather than being truly real-time.

Each time there is a new synthesis, a set of consensus metrics is gathered. The individual contributions are hashed, and for each batch a Merkle tree is constructed. Each individual receives a proof along with the updated statement and associated metrics.

The participant can then verify for themselves that their contribution was truly included in the synthesis, by checking that their response (which they can retrieve from their sent mail) yields the correct root hash when it is hashed against the “siblings” provided in the proof.

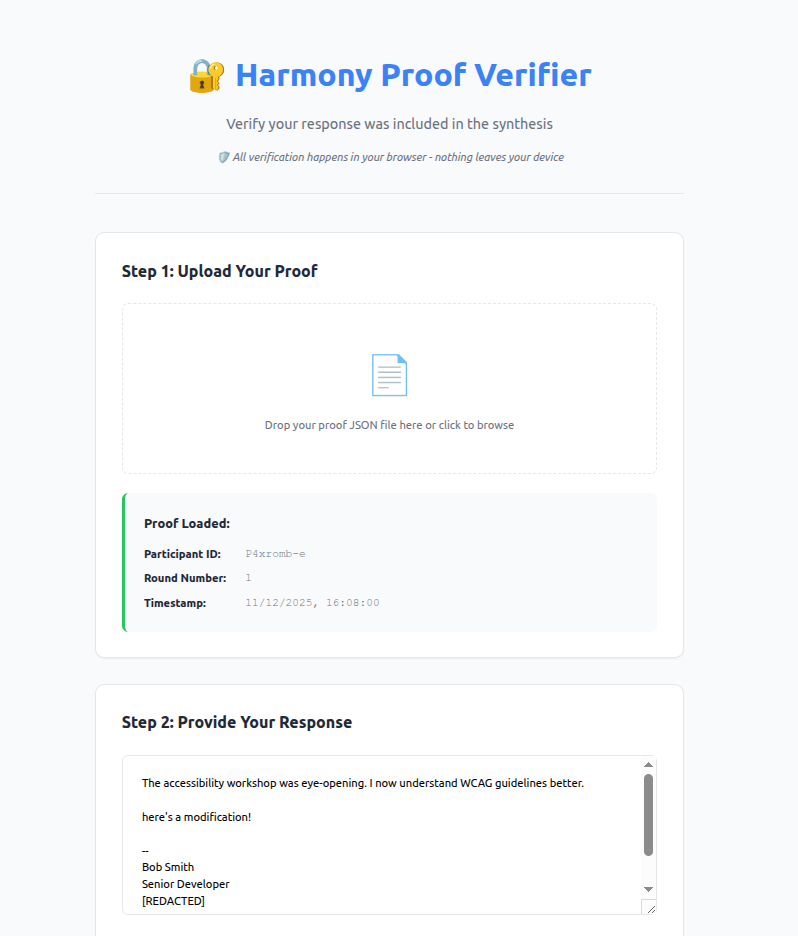

I went ahead and implemented this, and whipped up a small web app to demonstrate how this works. You can try it here.

To test it, you need to pretend you participated in an assembly. This means you would have sent an email with some opinion or feedback to Harmony by email and at the end of the round you received a synthesis report and your inclusion proof. Instead, you can paste the information below and save them as files to upload into the UI. Nothing leaves your machine - all the processing is done locally in the browser.

Proof

Paste the following into a file and save it as proof.json

{

“participantId”: “P4xromb-e”,

“roundNumber”: 1,

“leafHash”: “3f46f5ce837aba298778c3f7b8bd83e7eafd3b6d7d87fee3217d2c7021af2b71”,

“merkleRoot”: “871d52f68d615801814207bcd19499bf68c4510b806d820911556c779177f18b”,

“proof”: [

{

“position”: “left”,

“data”: “c7bec42c9b0bee7d9ffc87500aec4ec7abec6777f5b438236d50bce81fbde714”

},

{

“position”: “right”,

“data”: “817a68bea804e0f6e2a19721515752235f9e560e381658be3e46af290377b5b4”

}

],

“timestamp”: “2025-12-11T16:08:00.007Z”,

“algorithm”: “sha256”,

“version”: “1.0”,

“generated_at”: “2025-12-12T14:26:13.431Z”

}

Passing response

Paste the following response into a file and save it, or paste the text directly into the UI. This is the CORRECT email - the one you’d retrieve from your sent-mail. It hasn’t been tampered with and we’d expect the verification to PASS. Do not include the section dividers.

The accessibility workshop was eye-opening. I now understand WCAG guidelines better.

--

Bob Smith

Senior Developer

[REDACTED]

+1-555-0123

Failing response

Also try pasting the following response into a file and save it, or paste the text directly into the UI. This is the INCORRECT email - your original response has been tampered with! We’d expect the verification to FAIL.

The accessibility workshop was eye-opening. I now understand WCAG guidelines better.

here’s a modification!

--

Bob Smith

Senior Developer

[REDACTED]

+1-555-0123

Limitations and next steps

As you can probably tell, this is a work in progress! Notice also that this proof doesn’t demonstrate that the model did anything useful with your response, but it does confirm that your response was in the set that was passed to the model and that it wasn’t tampered with. Although, if the assembly operators were really malicious, they could generate these proofs and then pass different information to the model - these proofs don’t actually address that risk, there’s still a requirement to trust the operator. I’m not sure how to close that gap yet - one potential avenue is to get the LLM to generate a root hash from the set of contributions during it’s runtime and post that proof somewhere - maybe a blockchain, which can be verified against the root shared with participants in their proofs. I’m not convinced we can get to absolute trustlessness in this system, but we can layer up proof systems, open-source the code and publish extensive logs to minimise the opportunities for malicious operators or participants. Suggestions welcome!